Introduction

When we build software, it’s important to make sure it keeps working well even when many people use it at the same time. Checking this is called performance testing. In this blog, I describe a large project I worked on in which we tested our software to make sure it could handle heavy usage. I’ll share how we prepared, what we did, and what we learned.

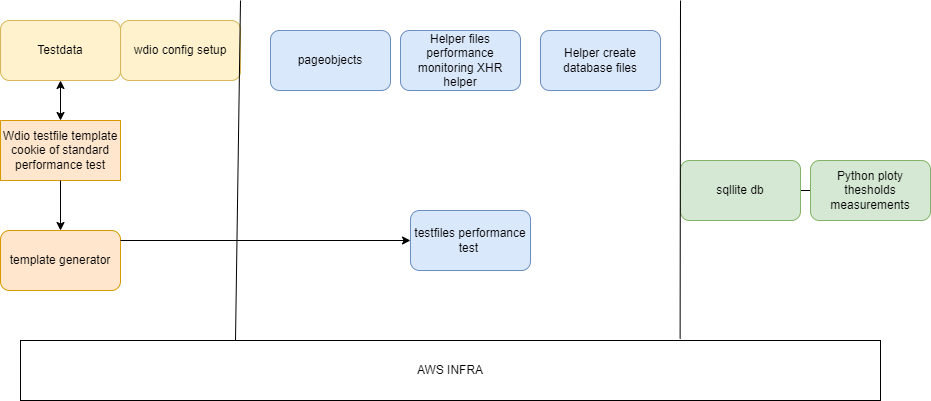

Test phases: Entry criteria → Process → Output

Entry Criteria: Preparing for the Test

Test Data: The test data is generated from CSV files. Because we were testing in a pre-production environment, we also had to manage the JWS session in order to run a UI test with a large number of users. To handle this, a GitLab CI job builds the session cookies every night for the next test run.
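A minimal sketch of what such a nightly cookie job might do, assuming the CSV has `username` and `password` columns and that `login` (hypothetical here) is whatever call returns a session token in pre-production. The cookie name `session` and the JSON layout are also illustrative assumptions; the point is only that each virtual user gets a ready-made cookie, so the UI test does not have to log in under load.

```python
"""Nightly cookie-preparation sketch (hypothetical names throughout).

Assumes: the CSV has 'username' and 'password' columns, `login` is a stand-in
for whatever call returns a session token in pre-production, and the cookie
name 'session' is illustrative only.
"""
import csv
import io
import json
from typing import Callable, Iterable

LoginFn = Callable[[str, str], str]  # (username, password) -> session token


def build_cookies(rows: Iterable[dict], login: LoginFn, domain: str,
                  cookie_name: str = "session") -> list[dict]:
    """Log in once per CSV row and keep the resulting token as a cookie record."""
    records = []
    for row in rows:
        token = login(row["username"], row["password"])
        records.append({
            "user": row["username"],
            "cookie": {"name": cookie_name, "value": token,
                       "domain": domain, "path": "/"},
        })
    return records


def cookies_json_from_csv(csv_text: str, login: LoginFn, domain: str) -> str:
    """Parse the user CSV and return the cookie file contents as JSON text."""
    rows = csv.DictReader(io.StringIO(csv_text))
    return json.dumps(build_cookies(rows, login, domain), indent=2)


if __name__ == "__main__":
    def fake_login(username: str, password: str) -> str:
        # Placeholder: a real job would authenticate against the pre-prod app.
        return f"token-for-{username}"

    sample_csv = "username,password\nalice,pw1\nbob,pw2\n"
    print(cookies_json_from_csv(sample_csv, fake_login, "preprod.example.com"))
```

In GitLab CI, a job like this could run from a scheduled pipeline and keep the JSON as an artifact; the WebdriverIO test would then set each user's cookie in the browser before opening the application.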

Test Generator Scripts: These scripts take the test data and generate participants or employers within the templates. WebdriverIO scripts run best in parallel when they are placed in a dedicated folder, so the WebdriverIO configuration can point at that folder.
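The generator step and the placeholder templates work together, and can be sketched as follows. This assumes placeholders written as `{{field_name}}`, one generated spec file per CSV row, and the file naming `generated_NNNN.spec.js`; all three are illustrative choices, not the project's actual conventions. Writing every generated spec into one folder is what lets a WebdriverIO `specs` glob pick them up for parallel runs.

```python
"""Sketch: fill a test template once per data row to produce unique spec files.

Assumptions (hypothetical): placeholders look like {{field_name}}, each CSV row
holds the values for one generated test, and output files go to one folder.
"""
import csv
import io
import re
import tempfile
from pathlib import Path

# Matches {{name}} or {{ name }} and captures the variable name.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def fill_template(template: str, values: dict) -> str:
    """Replace every {{name}} with values[name]; fail loudly on a missing field."""
    def substitute(match: re.Match) -> str:
        key = match.group(1)
        if key not in values:
            raise KeyError(f"template needs '{key}' but the data row lacks it")
        return str(values[key])

    return PLACEHOLDER.sub(substitute, template)


def generate_specs(template: str, csv_text: str, out_dir: Path) -> list[Path]:
    """Write one filled-in spec file per CSV row into out_dir and return the paths."""
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for index, row in enumerate(csv.DictReader(io.StringIO(csv_text))):
        path = out_dir / f"generated_{index:04d}.spec.js"
        path.write_text(fill_template(template, row), encoding="utf-8")
        paths.append(path)
    return paths


if __name__ == "__main__":
    template = 'describe("employer {{employer}}", () => { it("participant {{participant_id}}", async () => {}) })\n'
    data = "participant_id,employer\nP-001,Acme\nP-002,Globex\n"
    with tempfile.TemporaryDirectory() as tmp:
        for p in generate_specs(template, data, Path(tmp)):
            print(p.name, p.read_text(encoding="utf-8").strip())
```

Raising on a missing field, rather than leaving the placeholder in place, keeps a half-filled template from silently producing a test that runs with the wrong data.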

Test Templates: The templates use regular expressions to match placeholder variable names; the required data is inserted into a copy of the template, which produces a unique test.

Execution: Running the Tests

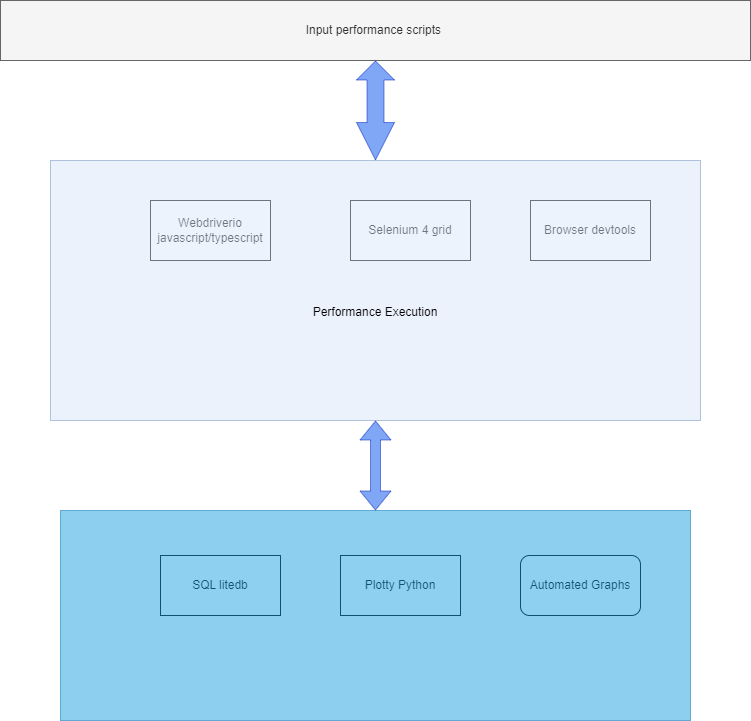

The tests run on GitLab runners, which are Docker containers. The number of virtual users (vusers) assigned to a runner is based on its resources, so each container has enough capacity to handle its share of the load. When starting a script, you can choose to run it in Chrome, Edge, Firefox, or Safari. The script integrates with DevTools and an XHR helper, a helper function that monitors the performance of a test script. The monitoring data is stored in a SQLite database, which can then be analyzed with Python or JavaScript charting tools. We chose Python because its large ecosystem of libraries made it the best fit for setting thresholds and producing plots.
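To make the storage step concrete, here is one possible layout for the results database, sketched with Python's standard `sqlite3` module. The table name `xhr_timings`, its columns, and the `XhrTiming` record are assumptions; in the actual setup the XHR helper inside the WebdriverIO script writes the rows, so only the shape of the data matters here.

```python
"""Sketch of a results database for XHR timings (hypothetical schema)."""
import sqlite3
from dataclasses import dataclass


@dataclass
class XhrTiming:
    run_id: str        # one identifier per pipeline run (assumed)
    browser: str       # e.g. "chrome", "firefox"
    vuser: int         # index of the virtual user that made the request
    url: str
    status: int        # HTTP status code of the XHR
    duration_ms: float


SCHEMA = """
CREATE TABLE IF NOT EXISTS xhr_timings (
    run_id      TEXT    NOT NULL,
    browser     TEXT    NOT NULL,
    vuser       INTEGER NOT NULL,
    url         TEXT    NOT NULL,
    status      INTEGER NOT NULL,
    duration_ms REAL    NOT NULL
)
"""


def open_results(path: str) -> sqlite3.Connection:
    """Open (or create) the results file and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def record(conn: sqlite3.Connection, timings: list[XhrTiming]) -> None:
    """Insert a batch of timings in a single transaction."""
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT INTO xhr_timings VALUES (?, ?, ?, ?, ?, ?)",
            [(t.run_id, t.browser, t.vuser, t.url, t.status, t.duration_ms)
             for t in timings],
        )


if __name__ == "__main__":
    conn = open_results(":memory:")
    record(conn, [XhrTiming("run-1", "chrome", 0, "/api/login", 200, 182.0)])
    print(conn.execute("SELECT * FROM xhr_timings").fetchall())
```

Because each run can write to its own file, the whole database can be archived or uploaded as a single artifact.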

Output: Analyzing the Results

SQLite: We chose SQLite to store test results mainly for cost reasons. Each result database is an isolated file that can easily be managed in S3. Any other database could be used instead to track performance in a more centralized way.
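The threshold analysis in Python might look roughly like this. It assumes a hypothetical `xhr_timings` table with `url` and `duration_ms` columns and uses a 95th-percentile budget of 1000 ms purely as an example value. The Plotly function is optional and imports the library only when called.

```python
"""Sketch: per-URL p95 threshold check and optional Plotly box plot."""
import sqlite3
import statistics
from collections import defaultdict

P95_THRESHOLD_MS = 1000.0  # example budget, not a value from the project


def durations_by_url(conn: sqlite3.Connection) -> dict[str, list[float]]:
    """Group all recorded durations by request URL."""
    grouped: dict[str, list[float]] = defaultdict(list)
    for url, duration in conn.execute("SELECT url, duration_ms FROM xhr_timings"):
        grouped[url].append(duration)
    return dict(grouped)


def p95(values: list[float]) -> float:
    """95th percentile; returns the value itself when there is only one sample."""
    if len(values) == 1:
        return values[0]
    # 99 cut points for n=100; index 94 is the 95th percentile.
    return statistics.quantiles(values, n=100, method="inclusive")[94]


def check_thresholds(conn: sqlite3.Connection,
                     threshold_ms: float = P95_THRESHOLD_MS) -> dict[str, float]:
    """Return the URLs whose p95 duration exceeds the threshold, with that p95."""
    breaches = {}
    for url, values in durations_by_url(conn).items():
        value = p95(values)
        if value > threshold_ms:
            breaches[url] = value
    return breaches


def plot_durations(conn: sqlite3.Connection, html_path: str) -> None:
    """Optional: one box per URL, written as an HTML file (needs `pip install plotly`)."""
    import plotly.graph_objects as go

    fig = go.Figure()
    for url, values in durations_by_url(conn).items():
        fig.add_trace(go.Box(y=values, name=url))
    fig.update_layout(yaxis_title="duration (ms)")
    fig.write_html(html_path)
```

In a CI job, a non-empty result from `check_thresholds` could be turned into a failing exit code so that a performance regression stops the pipeline.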

Plotly and Python: We used Plotly with Python to visualize the test outcomes.

CI/CD Integration: Streamlining with GitLab CI

Integrating the testing framework into our CI/CD pipeline with GitLab CI was a strategic move. It let us configure variables for launching performance tests on AWS Fargate at scale, such as the number of browser instances and the scaling options, which made our testing considerably more efficient.

The framework is not tied to AWS and could be adapted to other cloud environments, such as Azure. Using Kubernetes and Azure DevOps, we could orchestrate the performance tests across a more diverse set of infrastructure, for example by deploying Selenium Grid in Kubernetes clusters as a platform for executing browser-based tests. Such a setup would follow common cloud-native practices and keep the testing process scalable and resilient. Whether on AWS Fargate or on Kubernetes in Azure or Google Cloud, the goal stays the same: UI performance testing that shows the software remains reliable under heavy user load, regardless of the deployment environment.
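A minimal sketch of how pipeline variables might translate into a number of Fargate tasks. The variable names `TOTAL_VUSERS`, `BROWSERS_PER_TASK`, and `MAX_TASKS` and their defaults are hypothetical; in GitLab CI they would be defined as CI/CD variables and reach the launcher script as environment variables.

```python
"""Sketch: derive a container count from (hypothetical) CI variables."""
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class ScalePlan:
    tasks: int              # containers (e.g. Fargate tasks) to start
    browsers_per_task: int  # parallel browser sessions inside each container
    total_vusers: int


def plan_from_env(env: Optional[Mapping[str, str]] = None) -> ScalePlan:
    """Read the assumed variables and compute how many tasks cover all vusers."""
    env = os.environ if env is None else env
    total = int(env.get("TOTAL_VUSERS", "10"))
    per_task = int(env.get("BROWSERS_PER_TASK", "5"))
    max_tasks = int(env.get("MAX_TASKS", "50"))
    if total < 1 or per_task < 1:
        raise ValueError("TOTAL_VUSERS and BROWSERS_PER_TASK must be positive")
    tasks = (total + per_task - 1) // per_task  # integer ceiling division
    if tasks > max_tasks:
        raise ValueError(f"{tasks} tasks needed but MAX_TASKS is {max_tasks}")
    return ScalePlan(tasks=tasks, browsers_per_task=per_task, total_vusers=total)


if __name__ == "__main__":
    print(plan_from_env())
```

Each task could then run WebdriverIO with `maxInstances` set to `browsers_per_task`; how the tasks are actually launched is outside this sketch.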

This integration enables continuous monitoring at the browser level, covering functional and API behavior as well as performance.